Reframing the question: Where in the trading lifecycle does reasoning create edge?

The conversation around AI trading today is loud, confident, and mostly wrong. Everyone claims to have “AI-powered signals,” “autonomous trading agents,” or “LLM-driven hedge funds,” yet almost none of these claims survive basic engineering scrutiny. The truth is simple: LLMs are powerful, but they don’t work the way the hype suggests. If you want to use AI in real markets (not simulations, not marketing decks), you have to understand what LLMs are actually good at, what they fail at, and where they belong in the lifecycle of a trade.

At RuggedX, our platforms (Neptune for stocks, Triton for forex, Virgil for crypto, and Orion for options) run in live environments where latency, risk limits, broker rules, and human psychology all collide. We’ve wired LLMs into these systems in dozens of places: from strategy reviews and risk coaching to natural-language ops, GitHub automation, and Alexa voice interfaces. Along the way, one principle became obvious:

LLMs are not price oracles; they are reasoning engines.

So the real question isn’t “Can an LLM predict the market?” The real question is:

Where in the trading lifecycle does reasoning create edge? To answer that, we need to zoom out and look at the entire cycle a trade travels through, from the first macro scan to the last post-trade debrief.

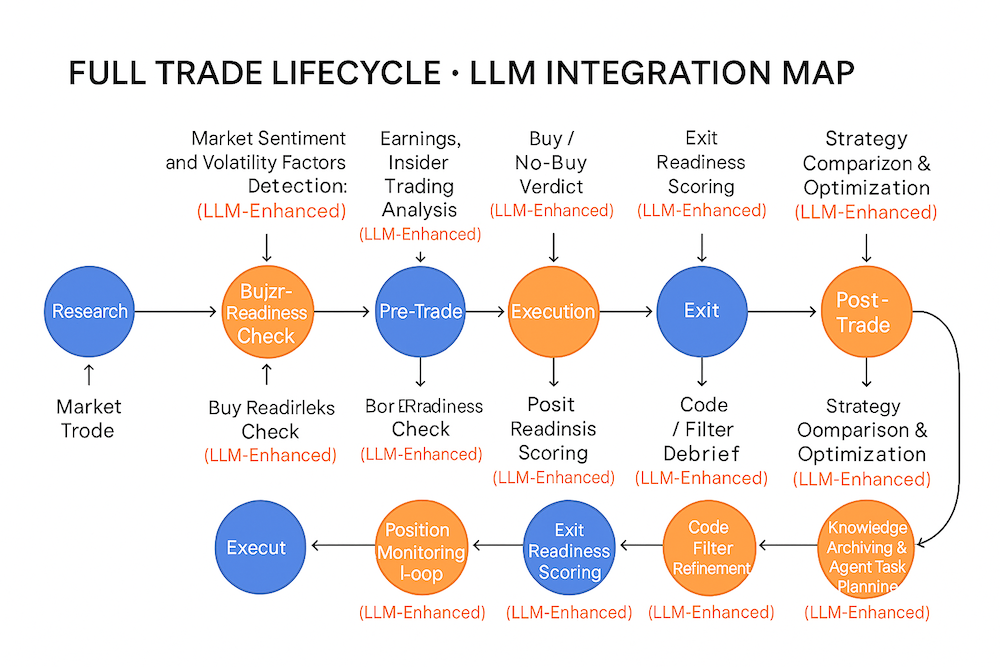

I. The 20-Stage Trade Lifecycle: A Circular Map for LLMs

A trade does not start when you press Buy or end when you press Sell. It lives inside a continuous loop: markets shift, strategies adapt, prompts evolve, risk rules tighten, and insights feed back into future trades. To reason about where LLMs belong, we built a full circular diagram of the trade lifecycle with twenty distinct stages.

Here is the lifecycle in text form, condensed to its main stages:

- Market Sentiment and Volatility Factors Detection: Identify trend vs range, risk-on vs risk-off, and macro tone.

- Earnings and Insider Trading Analysis: Interpret earnings, macro events, insider trades, and political disclosures.

- LLM-Powered Strategies (Mean Reversion, Pullback, etc.): Load the algorithm, timeframe, objective, and risk limits.

- Pre-Trade Risk Check: Evaluate account exposure, correlation, liquidity, and overnight risk.

- AI Prompt / Strategy Refinement: Use AI to rewrite the prompts behind LLM-based strategies, based on their performance and the data insights.

- Buy Readiness Check: Evaluate buy readiness from the ticker's technicals and news sentiment, and check whether the setup still makes sense in the current context.

- Buy / No-Buy Verdict: Return a strict JSON verdict that the trading engine uses to decide whether we enter a trade. A separate deep-dive blog post covers this stage.

- Position Monitoring Loop: Provide commentary and anomaly detection while the trade is open.

- Mid-Trade Risk Re-Assessment: Re-check catalysts, sentiment, and regime shifts.

- Exit Readiness Scoring: Evaluate whether the original thesis has decayed.

- Post-Trade Debrief: Summarize what worked, what failed, and what to adjust.

- Strategy Comparison & Optimization: Compare Strategy A vs Strategy B on the same symbol or window.

- Code / Filter Refinement: A backend admin feature that uses LLMs to evaluate our algorithmic logic and suggest method fixes, filter improvements, and new technical stacks.

- Knowledge Archiving & Agent Task Planning: Store prompts and responses, and generate engineering tasks for the next iteration.

This is what it actually means to “use LLMs in trading.” Not a chatbot pressing buy and sell, but a reasoning layer injected into specific points of the loop.

II. LLMs Are Not Price Oracles — They’re Context Engines

The first misconception we have to kill is the idea that LLMs are built to forecast price. They’re not. These models don’t estimate return distributions or fit historical price series the way a statistical model does. Instead, they excel at something different: understanding and transforming context.

That means they can:

- Compress long earnings transcripts into concise, trade-relevant summaries.

- Explain why two strategies on the same symbol are producing different outcomes.

- Flag when a “perfect” technical setup conflicts with live macro or event risk.

- Generate code suggestions or filter improvements when your backtests show drift.

In other words, LLMs don’t replace your signal engine; they surround it with intelligence.

The math proposes. The LLM evaluates, explains, and sometimes vetoes.
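
The "propose, then veto" relationship can be sketched as a small gate function. This is a minimal illustration, not the RuggedX implementation: the function name `decideEntry`, the array shapes, and the `approve`/`veto` labels are all hypothetical, and the choice to fail closed when the review is missing is an assumption.

```php
<?php
declare(strict_types=1);

/**
 * Combine a quantitative signal with an LLM review.
 *
 * Hypothetical shapes (not from the original article):
 *   $signal = ['symbol' => string, 'side' => 'buy'|'none']
 *   $review = ['verdict' => 'approve'|'veto', 'reason' => string]
 */
function decideEntry(array $signal, ?array $review): array
{
    // The math proposes: without a quantitative signal there is no trade,
    // and the LLM cannot create one.
    if (($signal['side'] ?? 'none') !== 'buy') {
        return ['enter' => false, 'reason' => 'no quantitative signal'];
    }

    $verdict = $review['verdict'] ?? null;

    if ($verdict === 'approve') {
        return ['enter' => true, 'reason' => (string) ($review['reason'] ?? '')];
    }

    if ($verdict === 'veto') {
        return [
            'enter' => false,
            'reason' => 'LLM veto: ' . (string) ($review['reason'] ?? 'unspecified'),
        ];
    }

    // Assumption: a missing or malformed review fails closed (no trade).
    return ['enter' => false, 'reason' => 'LLM review missing or malformed'];
}
```

The asymmetry is the point of the sketch: the review can only turn a proposed entry into a non-entry, never the other way around.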

III. A Concrete Example: Automated Market Sentiment & Volatility Analysis

Before the system even looks at an individual ticker like TSLA or NVDA, it needs to establish a "world view." Is the market currently Risk-On or Risk-Off? Are we in a trend or in chop? To answer this, we don't rely on static indicators alone; we use LLMs to synthesize a macro picture from news, volatility factors, and key index behavior.

Step 1: The Request (Controller & Job Dispatch)

We treat market sentiment generation as an asynchronous background task. The controller doesn't block; it dispatches a job to the queue. This keeps the dashboard snappy while the LLM (e.g., Perplexity or GPT-4) crunches the data.

    public function generateAIMarketSentiment($request)
    {
        $llmType = $request->llmType; // e.g., 'perplexity'

        // Assumption: the original snippet never defines $userId; here it is
        // read from the request. Swap in your own auth lookup as needed.
        $userId = $request->userId;

        // 1. Build the prompt instructions dynamically
        $aiPromptsModel = new AIPrompts;
        $instructions = $aiPromptsModel->marketSentimentPrompt($userId) .
            "<br> Format your response in proper html markup...";

        // 2. Dispatch the heavy lifting to a low-priority queue so the
        //    controller can return immediately
        GenerateAIInsightsJob::dispatch([
            'subject_type' => 'market_sentiment_analysis',
            'prompt' => json_encode([]), // Or specific context data
            'llm_type' => $llmType,
            'instruction' => $instructions,
        ])->onQueue('low');

        return $this->webResponse("AI Insights generation started", $request);
    }

Step 2: The Contextual Prompt

The magic happens in how we ask. We don't just say "analyze the market." We give the LLM a fallback mechanism: if specific news data isn't provided, it defaults to a "Magnificent 7" proxy, ensuring we always have a baseline for market health.

"Generate a detailed analysis of market volatility factors for currently today and the upcoming 4 weeks.

**If no specific data is provided, default to a 'Magnificent 7' focused analysis.**

The 'Magnificent 7' stocks are: AAPL, GOOGL, MSFT, AMZN, NVDA, TSLA, META.

Clearly identify and elaborate on the top 5 factors that will affect market volatility..."
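
One way to assemble a prompt like this is a small builder that decides on the fallback in code before the request is sent. This is a sketch under assumptions: `buildVolatilityPrompt`, its parameters, and the idea of passing headlines as an array are hypothetical, and the prompt quoted above leaves the fallback decision to the model rather than to code.

```php
<?php
declare(strict_types=1);

// Ticker list taken from the prompt quoted in the article.
const MAGNIFICENT_7 = ['AAPL', 'GOOGL', 'MSFT', 'AMZN', 'NVDA', 'TSLA', 'META'];

/**
 * Build a volatility-analysis prompt. When no usable headlines are
 * supplied, fall back to a 'Magnificent 7' focused analysis.
 * (Hypothetical helper, not part of the original codebase.)
 */
function buildVolatilityPrompt(array $headlines = [], int $horizonWeeks = 4): string
{
    $lines = [
        "Generate a detailed analysis of market volatility factors for today "
            . "and the upcoming {$horizonWeeks} weeks.",
    ];

    // Drop empty or whitespace-only entries before deciding on the fallback.
    $headlines = array_values(array_filter(
        array_map('trim', $headlines),
        static fn (string $h): bool => $h !== ''
    ));

    if ($headlines === []) {
        $lines[] = "No specific data is provided, so default to a 'Magnificent 7' focused analysis.";
        $lines[] = "The 'Magnificent 7' stocks are: " . implode(', ', MAGNIFICENT_7) . '.';
    } else {
        $lines[] = 'Base the analysis on the following headlines:';
        foreach ($headlines as $headline) {
            $lines[] = '- ' . $headline;
        }
    }

    $lines[] = 'Clearly identify and elaborate on the top 5 factors that will affect market volatility.';

    return implode("\n", $lines);
}
```

Calling `buildVolatilityPrompt()` with no arguments yields the Magnificent 7 variant; passing headlines replaces the fallback lines with a bulleted context list.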

Step 3: Integrating the Result

Once the job completes and saves the insight, the frontend retrieves it via a dedicated endpoint. The LLM has already formatted the output as HTML (as requested in Step 1), so it plugs directly into the trader's view.

    public function getAIMarketVolatilityFactorsData($request)
    {
        $marketNewsModel = new MarketNews();

        // Retrieve the stored analysis
        $latestVolatility = $marketNewsModel->latestMarketVolatilityFactorsAnalysis($request->llmType);

        // Return the view fragment
        return view('/base/markets/housetrading/ajax/ai-market-volatility-factors-ajax', [
            'marketVolatilityFactorsAnalysis' => $latestVolatility,
        ]);
    }

By automating this, we continuously maintain a "Market Volatility" dashboard tile that identifies trend vs range and macro tone, which in turn adjusts our algorithms' risk profiles without manual intervention.
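
How a regime label might feed a risk profile can be sketched as a plain lookup table. Everything here is an assumption for illustration: the label names, the profile fields, the numbers, and the function `riskProfileFor` do not come from the article, and how the label is extracted from the stored analysis is left out.

```php
<?php
declare(strict_types=1);

// Hypothetical profiles; the values are placeholders, not recommendations.
const RISK_PROFILES = [
    'risk_on'  => ['max_positions' => 8, 'risk_per_trade_pct' => 1.0],
    'neutral'  => ['max_positions' => 5, 'risk_per_trade_pct' => 0.5],
    'risk_off' => ['max_positions' => 2, 'risk_per_trade_pct' => 0.25],
];

/**
 * Map a regime label (e.g. 'Risk-On') to a fixed risk profile.
 * The LLM only supplies the label; the profile values stay in code.
 */
function riskProfileFor(?string $regimeLabel): array
{
    // Normalize 'Risk-On', 'risk on', ' RISK_ON ' to 'risk_on'.
    $key = str_replace(['-', ' '], '_', strtolower(trim((string) $regimeLabel)));

    // Assumption: unknown or missing labels get the most conservative profile.
    return RISK_PROFILES[$key] ?? RISK_PROFILES['risk_off'];
}
```

Keeping the table in code means a strange or hallucinated label can at worst select the most conservative profile.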

IV. Context Engineering: Feeding the Model Like a Trader, Not a Journalist

If you’ve played with generative AI, you already know the basic rule: garbage in, garbage out. In trading, “garbage” usually means one of two things: irrelevant data or unbounded instructions.

Our systems do the opposite:

- We feed only the latest, relevant indicators—not 20 years of stale fundamentals.

- We compress news down to a few bullet points and risk labels.

- We hard-code instructions that force the model to answer in JSON with specific keys.

This is what gives LLM decisions weight. The models aren’t hallucinating stories; they are constrained consultants reviewing a curated context window with a very specific job: “Does this trade make sense right now?”
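
The two halves of that discipline, a compact context going in and a strictly validated JSON answer coming out, can be sketched as follows. The helper names and the keys `verdict`, `confidence`, and `reason` are hypothetical; the article only states that the model must answer in JSON with specific keys.

```php
<?php
declare(strict_types=1);

/**
 * Build a compact context payload: latest indicators plus a few news bullets.
 * (Hypothetical helper; field names are assumptions.)
 */
function buildContext(string $symbol, array $indicators, array $newsBullets, int $maxBullets = 3): string
{
    return json_encode([
        'symbol' => $symbol,
        'indicators' => $indicators,
        // Keep only the first few bullets so the context stays small.
        'news' => array_slice(array_values($newsBullets), 0, $maxBullets),
    ], JSON_THROW_ON_ERROR);
}

/**
 * Parse an LLM reply and accept it only if it is exactly the expected JSON
 * object. Returns null for anything else (extra prose, missing or extra keys,
 * unknown verdicts, out-of-range confidence).
 */
function parseVerdict(string $raw): ?array
{
    $data = json_decode($raw, true);
    if (!is_array($data)) {
        return null;
    }

    $keys = array_keys($data);
    sort($keys);
    if ($keys !== ['confidence', 'reason', 'verdict']) {
        return null;
    }

    if (!in_array($data['verdict'], ['buy', 'no_buy'], true)) {
        return null;
    }
    if (!is_int($data['confidence']) && !is_float($data['confidence'])) {
        return null;
    }
    if ($data['confidence'] < 0 || $data['confidence'] > 1) {
        return null;
    }
    if (!is_string($data['reason'])) {
        return null;
    }

    return $data;
}
```

A reply such as `Sure! Here is the JSON: {...}` is rejected outright, so the trading engine never has to guess what the model meant.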

V. The Hard Boundary: LLMs Advise, Deterministic Code Executes

There’s one non-negotiable rule if you want to use LLMs in live markets without getting wrecked:

The LLM is allowed to influence whether a trade happens.

It is never allowed to decide how it happens.

That means:

- Risk rules, position sizing, and stops are completely deterministic.

- The LLM cannot override exposure or capital limits.

- Execution is handled by strict, testable code paths—not AI.

This separation of concerns is what turns LLMs from a toy into an actual trading edge. The model thinks; the engine executes; the risk layer enforces discipline.
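
A minimal sketch of that boundary, assuming a long-only strategy with fixed-fractional sizing: the LLM contributes a single boolean, and every number in the order comes from deterministic code. The function `planOrder`, its parameters, and the default percentages are hypothetical and chosen only for illustration.

```php
<?php
declare(strict_types=1);

/**
 * Plan a long entry. The LLM input is a yes/no gate; share count, entry, and
 * stop are computed deterministically and capped by hard limits.
 * Returns null when no order should be placed.
 */
function planOrder(
    bool $llmApproves,
    float $equity,
    float $entry,
    float $stop,
    float $riskPct = 0.5,         // assumed: % of equity risked per trade
    float $maxPositionPct = 10.0  // assumed: max % of equity in one position
): ?array {
    if (!$llmApproves) {
        return null; // the LLM can block a trade, nothing more
    }

    $riskPerShare = $entry - $stop;
    if ($equity <= 0 || $entry <= 0 || $riskPerShare <= 0) {
        return null; // invalid inputs, or stop not below entry
    }

    // Shares allowed by the per-trade risk budget.
    $byRisk = floor(($equity * $riskPct / 100) / $riskPerShare);
    // Shares allowed by the position-size cap.
    $byCap = floor(($equity * $maxPositionPct / 100) / $entry);

    $shares = (int) min($byRisk, $byCap);
    if ($shares < 1) {
        return null;
    }

    return ['shares' => $shares, 'entry' => $entry, 'stop' => $stop];
}

// Example: 100,000 equity, entry 50, stop 49. The risk budget allows
// 500 shares, but the 10% position cap limits the order to 200.
$order = planOrder(true, 100000.0, 50.0, 49.0);
```

Nothing the model returns can change `$riskPct`, `$maxPositionPct`, or the stop; those live entirely on the deterministic side.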

VI. So… Can You Actually Use LLMs in Trading?

Yes, but only if you stop asking them to be fortune-tellers and start using them as what they truly are: high-bandwidth reasoning partners embedded at the right stages of the trade lifecycle.

In our systems, LLMs:

- Summarize the market regime and volatility landscape each day.

- Interpret insider activity, Senate/House trades, and political disclosures.

- Provide buy/sell/hold readiness scores for live strategies.

- Generate post-trade coaching reports and strategy comparisons.

- Suggest method and filter improvements—and even draft code for GitHub PRs.

What they never do is place an order, move a stop, or gamble with capital on their own.

That is the real answer to the question, “Can you actually use LLMs in trading?”

Not by handing them your account and hoping they’re right, but by embedding them into a disciplined, circular lifecycle where every decision, explanation, and veto is logged, auditable, and constrained by hard rules.

In that environment, LLMs stop being a buzzword and become what they should have been all along:

a durable edge in how you think, not a shortcut in what you gamble on.